It’s been almost a year since OpenAI launched ChatGPT.

In that time, it’s had a lot of challenges thrown its way. I had also been looking for a way to test it out, to see what it could do for UX writing in healthtech.

And I came up with the perfect project.

I was inspired after logging into the patient portal for my primary care provider. Sometimes the language was pretty densely laden with healthcare jargon and legalese. I thought, surely there’s a way to make this easier to understand. ChatGPT should be able to help with this.

Here’s the story.

The ‘why’ behind the project

The recommended readability level for health information is 6th grade or below. However, in practice, the average readability level is above grade 10.

A patient portal, though… that’s kind of different from ‘health information’, right?

Patient portals are like a frame for personal health information. They’re where people go to understand their personal health care options and action steps. Because they target the same audience, the content should be just as easy to read and understand.

Goal

I aimed to improve the readability score of selections of copy found throughout the patient portal website to within the level recommended for health information.

I limited my scope to revising copy in-place, without making changes to the UX, UI, or information architecture.

Limitations

Adding a layer of complexity is the dense language from health, business, digital, and legal influences. Certain key words and phrases can’t be changed, for liability or for lack of simpler alternative terminology.

For this project, I didn’t know the specific constraints around language. I could identify some. More importantly, I could speculate as to why certain language was used. That guided my work in dissecting the copy, discovering what was flexible to change.

The process

I picked out excerpts to edit based on how complex they seemed at a glance. My main red flags were:

- long, complex sentences and words

- the complexity of the concept being communicated

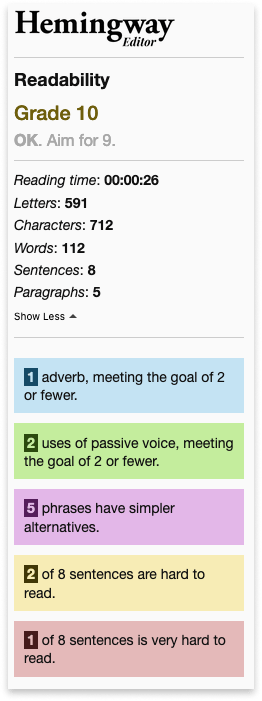

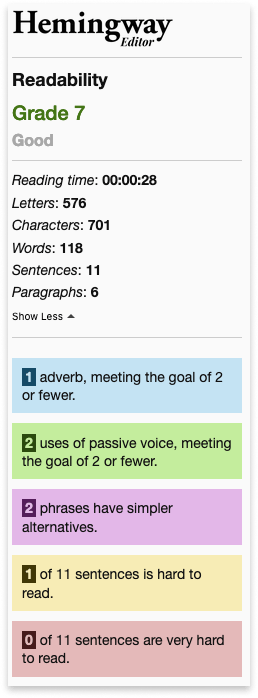

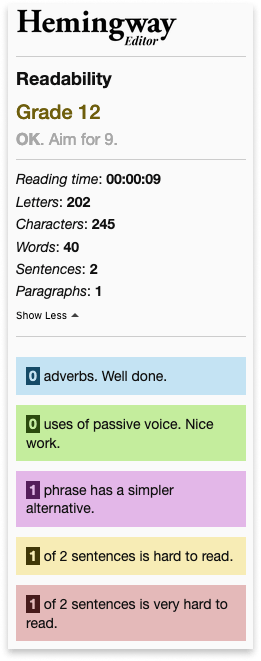

For a quick readability check, I plugged the suspect text into Hemingway App. I focused on excerpts ranked at grade 10 or above in this tool.

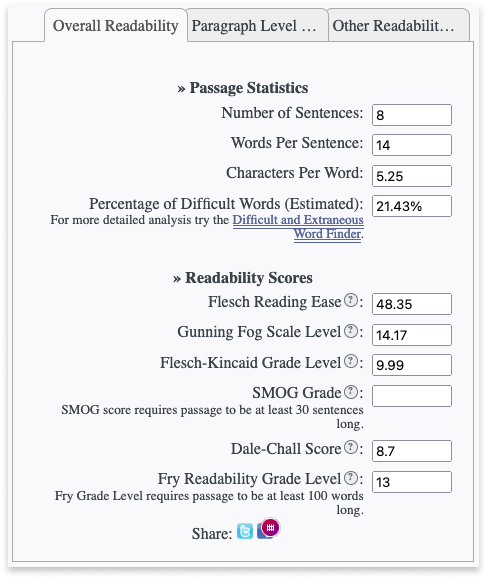

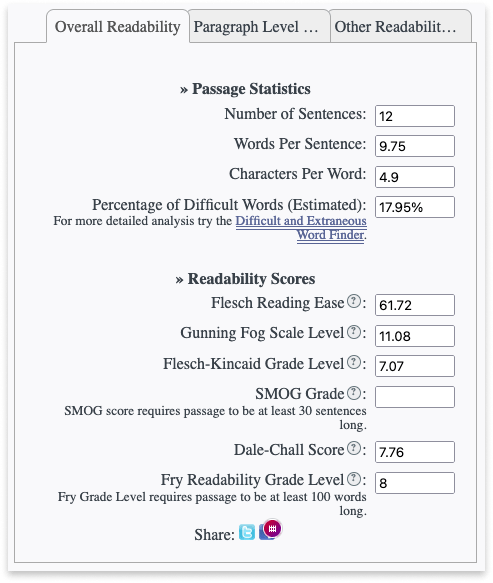

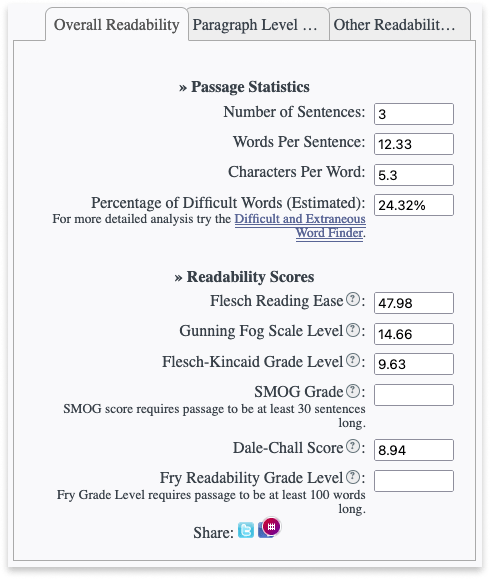

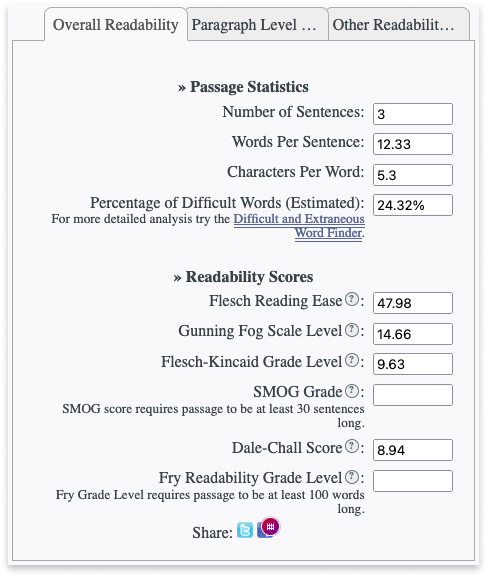

If an excerpt met that criterion, I would then put it into the Datayze Readability Analyzer for additional readability metrics.
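This screening step can be roughly approximated in code. The sketch below is not how Hemingway App or Datayze compute their scores (this article doesn't cover that). It assumes the widely published Flesch-Kincaid grade-level formula and a crude vowel-group syllable counter, so its numbers will differ from either tool. The function names are illustrative, and the grade 10 default mirrors the cutoff used in this project.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count groups of consecutive vowels in the word."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "make") usually adds no syllable.
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade level: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    # Split on end punctuation followed by whitespace or end of text,
    # so decimals such as "$2,123.35" do not end a sentence.
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", text) if s.strip()]
    words = re.findall(r"[A-Za-z'’]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def needs_revision(text: str, threshold: float = 10.0) -> bool:
    """Flag copy that scores at or above the threshold grade."""
    return fk_grade(text) >= threshold


if __name__ == "__main__":
    excerpt = (
        "Depending on how they respond, you may be responsible for some or "
        "all of the $2,123.35 that are not done being processed."
    )
    print(round(fk_grade(excerpt), 1), needs_revision(excerpt))
```

A score like this is only a first filter. It measures sentence and word length, not whether the concept itself is hard to grasp, which is why the excerpts still needed a human read.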

Before involving ChatGPT, I took a first crack at an edit. This helped me understand the message and the intent behind it.

Then I’d plug the original text into ChatGPT, asking a variation on “can you make this into a 6th grade reading level?”

Between ChatGPT and my own crafting, I would whittle down the copy into its base ideas, expressed simply.

Implementation

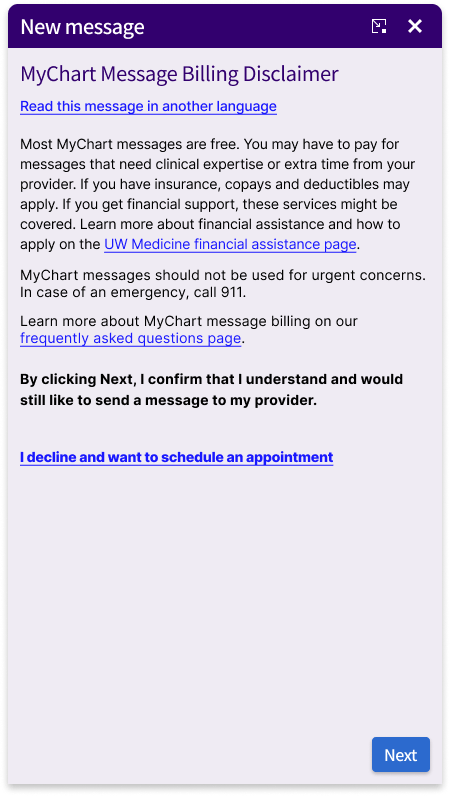

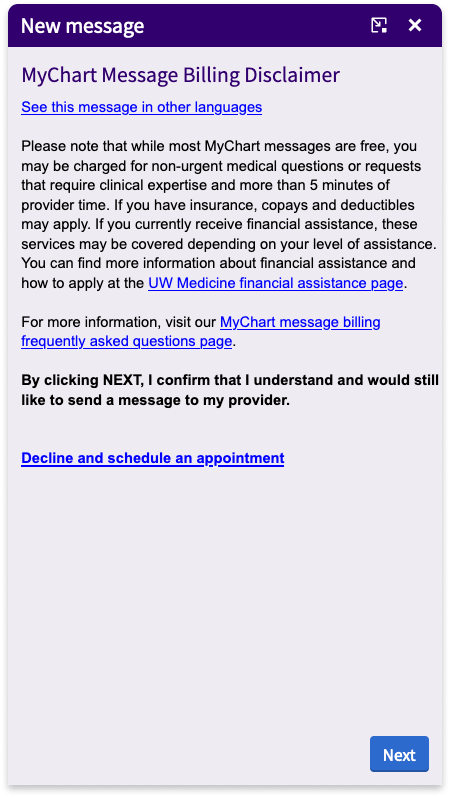

Example 1: Disclaimer

In this first example, despite some limitations, ChatGPT was useful in rewriting this disclaimer:

This is a notice about how patients might be charged for messages sent through the patient portal. On a close read, you can see how the language is carefully crafted around the many stakeholders behind this disclaimer:

- Providers: “requests that require clinical expertise and more than 5 minutes”

- Business: “If you have insurance, copays and deductibles may apply.”

- Patient support: “For more information visit our… frequently asked questions page”

- Product: “Find more information… and how to apply at the XX page”

- Legal: “non-urgent medical questions”, “I confirm that I understand”, “Decline”

It’s a tricky bit of copy to write, which is likely why the readability score is high.

In Hemingway App, it ranked at a grade 10 readability level. This difficulty level was confirmed by the Datayze Readability Analyzer.

This was the one instance where I actually started with ChatGPT. I gave it the main paragraph in the disclaimer, to which it returned:

“Some MyChart messages are free, but you might have to pay for medical questions that are not urgent or need a lot of time from the doctor. If you have insurance, you may need to pay copays and deductibles. If you get financial help, these services might be covered. Learn more about financial help and how to apply on the UW Medicine website.”

This gave me a direction. Mostly, it showed me what could be changed, and what couldn’t.

I ran with this as a foundation for 5 more iterations of my own, with this as the final copy:

Together with the rest of the body text, the readability level of this excerpt dropped to a grade 7.

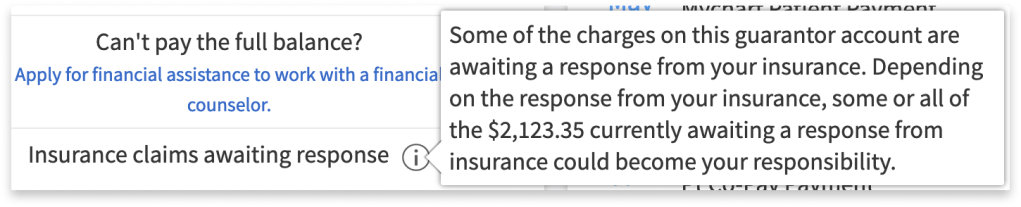

Example 2: Infotip

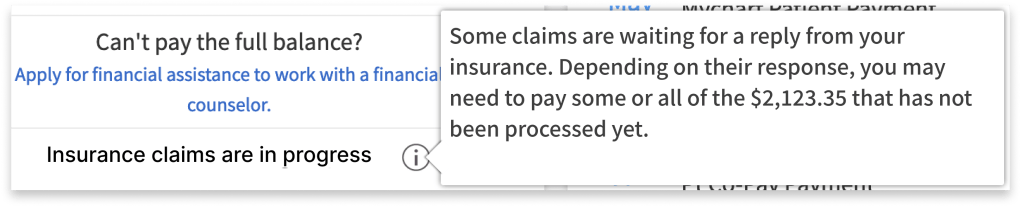

An example of when ChatGPT was not especially useful was editing this infotip:

This infotip ranked at a grade level of 12, per Hemingway App.

Datayze confirmed the difficulty of the excerpt with its own various metrics. Cumulatively, the metrics skewed toward a college-level readability.

Right away I could knock it down to a grade 7 by clearing out jargon (“guarantor account”), removing repetitive phrases (“response from your insurance”), and rephrasing to pare down filler words. Within 2 iterations, I had a shorter, clearer message:

“Your insurance is still processing some services. Depending on how they respond, you may be responsible for some or all of the $2,123.35 that are not done being processed.”

Then I fed the original copy into ChatGPT, which returned:

“Some of the charges on this account are waiting for a reply from your insurance. Depending on what your insurance says, you might need to pay some or all of the $2,123.35 that’s currently waiting for their response.”

Not especially helpful.

It repeated a lot of the issues that already existed in the original. The one thing I did gain was that the concept of “being responsible for charges” could be unpacked. I incorporated this into the final draft:

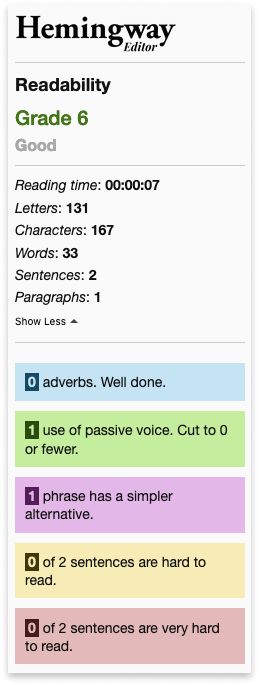

This was just enough to tip the readability level to grade 6 in Hemingway App, which was reflected in the improved overall readability scoring in the Datayze Readability Analyzer.

Outcome

I did not meet my goal for the readability level. But I got pretty close. Of six selections of copy that had a readability rank of 10th grade or above, I revised five to a 7th grade level and one to a 6th grade level. I am confident the key messages remained intact, despite working around the limitations of specialized language.

Take-aways

While I ended up writing the bulk of the revised content myself, ChatGPT was a great tool. It didn’t give me a 1:1 replacement for each excerpt. But what it returned was useful in what it implied about the content. From its responses, I could figure out which concepts were already in their most basic state and which could be broken down further, and I picked up suggestions that helped me get the text closer to the overall goal.

Where ChatGPT failed was context.

At times it would return copy that erased key phrases which I imagine were required by the provider’s legal team. Healthtech is going to have a lot of these.

Other times the way it broke down concepts became too basic and too wordy, lengthening the block of text beyond the space available.

And there were also situations where ChatGPT didn’t have enough information about the intent and UX of the page. When this happened, it would spit out instructions that didn’t make sense.
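The first two failure modes above (dropped key phrases and copy that outgrows its space) are mechanical enough to catch automatically before a rewrite goes any further. Here is a minimal sketch, assuming you keep your own list of phrases that must survive and know a character budget for the UI element. The function name, the phrase list, and the 300-character limit are all hypothetical, and the phrases shown are only ones I guessed were required.

```python
def check_rewrite(rewrite: str, required_phrases: list[str], max_chars: int) -> dict:
    """Report required phrases missing from a rewrite and how far it exceeds the length budget."""
    lowered = rewrite.lower()
    # Case-insensitive substring match; a phrase counts only if it appears verbatim.
    missing = [p for p in required_phrases if p.lower() not in lowered]
    return {
        "missing_phrases": missing,
        "over_limit_by": max(len(rewrite) - max_chars, 0),
    }


if __name__ == "__main__":
    # Part of the ChatGPT rewrite from Example 1.
    rewrite = (
        "You might have to pay for medical questions that are not urgent "
        "or need a lot of time from the doctor. If you have insurance, "
        "you may need to pay copays and deductibles."
    )
    # Hypothetical constraints, not confirmed by the provider.
    required = ["non-urgent medical questions", "copays and deductibles"]
    print(check_rewrite(rewrite, required, max_chars=300))
```

A check like this can't tell you whether a paraphrase is legally acceptable. It only flags where to look, which still leaves the context problem to the writer.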

What next?

ChatGPT is a powerful new tool. And all new tools take practice to wield well. There’s skill in crafting prompts, feeding parameters, and reading into the response for what it understands and how.

I am excited to bring it into my work, hone my skills, and use it to improve the quality of content for users’ experiences in healthtech.

Interested in partnering? Let’s talk to see how I can help you create a comfortable digital health experience. Drop me a note at evy.haan@gmail.com.

Photo by Alex Knight on Unsplash